In today's AI infrastructure, storage is often divided between high-performance TLC SSDs and high-capacity QLC SSDs. TLC drives handle tasks like training, fine-tuning, and inference, while QLC SSDs support data ingestion and archiving with cost-efficient density. This role split has become the norm.

But as compute density increases—especially with modern GPU deployments—TLC SSDs are taking on more than just the “hot tier.” Memblaze's PBlaze7 7940 PCIe 5.0 SSD exemplifies this shift.

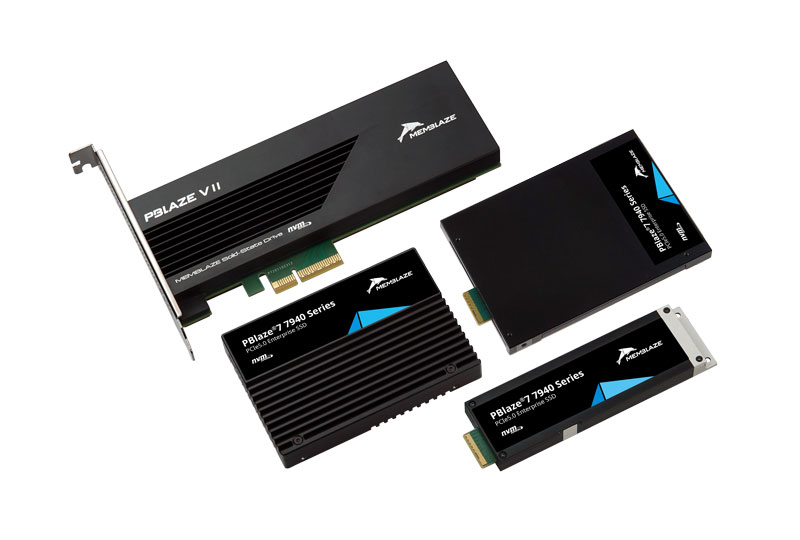

One of the world's first PCIe 5.0 enterprise SSDs, the PBlaze7 7940 delivers up to 14GB/s sequential read and 10GB/s sequential write throughput and capacities up to 30.72TB, while drawing just 16W in typical read workloads. It challenges the long-standing belief that you must trade performance for capacity, or power for performance.

The PBlaze7 7940 has already seen widespread adoption by leading AI and internet companies, with hundreds of thousands of units deployed. It stands out as a TLC SSD capable of serving multiple roles across the AI workflow.

AI pipelines begin with massive volumes of data—from endpoints, sensors, and devices. Centralized preprocessing often creates network bottlenecks and delays.

A better approach is to preprocess data at the edge, closer to where it is generated. Here, the PBlaze7 7940's E1.S form factor, capacities up to 15.36TB, and TLC endurance offer distinct advantages. Compared with QLC drives, it delivers better performance under write-heavy workloads, and a single 2U edge server populated with these drives can hold nearly 1PB. That makes it well suited to real-time filtering, formatting, and staging of data before model ingestion.
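To see how "nearly 1PB in 2U" can add up, here is a back-of-the-envelope sketch. The article does not say how many E1.S bays the server has, so the 64-slot count below is a hypothetical assumption chosen for illustration, and the result is raw capacity before any RAID or filesystem overhead.

```python
import math

DRIVE_CAPACITY_TB = 15.36  # largest E1.S capacity point cited in the article
ASSUMED_E1S_SLOTS = 64     # hypothetical: number of E1.S bays in one 2U chassis


def raw_capacity_tb(slots: int, drive_tb: float) -> float:
    """Raw capacity in decimal TB (no RAID, filesystem, or spare overhead)."""
    return slots * drive_tb


def drives_for_target(target_tb: float, drive_tb: float) -> int:
    """Smallest whole number of drives whose raw capacity reaches target_tb."""
    return math.ceil(target_tb / drive_tb)


total_tb = raw_capacity_tb(ASSUMED_E1S_SLOTS, DRIVE_CAPACITY_TB)
print(f"{ASSUMED_E1S_SLOTS} x {DRIVE_CAPACITY_TB} TB = {total_tb:.2f} TB raw")
print(f"Drives needed for a full 1 PB: {drives_for_target(1000, DRIVE_CAPACITY_TB)}")
```

Under this assumption, 64 drives give about 983TB, which is consistent with the "nearly 1PB" figure; reaching a full petabyte would take 66 drives.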

Training and fine-tuning models requires sustained, fast access to large datasets. Frequent checkpoints and constant data streaming can quickly expose I/O limits.

With up to 30.72TB of capacity, 14GB/s sequential read, 9.7GB/s sequential write, and 2.7 million random read IOPS, the PBlaze7 7940 helps keep training pipelines from stalling on I/O. It provides both the throughput to feed modern GPU clusters and the capacity density to keep large datasets and checkpoints close to compute.
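Write bandwidth matters most during checkpointing, when training typically waits for state to land on storage. The sketch below gives an idealized lower bound on checkpoint write time using the 9.7GB/s figure quoted above. It assumes perfect striping across drives and sustained sequential writes; the checkpoint size and drive count are hypothetical, and real systems will see additional filesystem, network, and serialization overhead.

```python
WRITE_GBPS = 9.7  # per-drive sequential write figure quoted in the article (GB/s)


def checkpoint_write_seconds(checkpoint_gb: float, drives: int,
                             per_drive_gbps: float = WRITE_GBPS) -> float:
    """Idealized lower bound: size divided by aggregate striped write bandwidth."""
    if drives <= 0:
        raise ValueError("drives must be positive")
    return checkpoint_gb / (drives * per_drive_gbps)


# Hypothetical example: a 1,000 GB checkpoint written to 1 drive vs. 8 drives.
for n in (1, 8):
    print(f"{n} drive(s): {checkpoint_write_seconds(1000, n):.1f} s")
```

Treat the output as a floor rather than a prediction; the point is that aggregate write bandwidth, not just capacity, determines how long GPUs sit idle during a checkpoint.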

In multi-turn inference and long-context workloads, high-capacity SSDs can hold key-value (KV) cache data offloaded from GPU memory, so earlier context can be reloaded quickly instead of recomputed, cutting both redundant computation and latency. Because each 7940 holds so much data, fewer drives are needed to reach a given capacity, which frees PCIe lanes for more GPUs or faster networking.
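The lane-saving argument is simple arithmetic: drive count scales inversely with per-drive capacity, and each drive consumes a fixed number of PCIe lanes. The sketch below assumes each NVMe SSD attaches over an x4 link, which is common for enterprise NVMe drives but is an assumption here. The 100TB per-node KV cache target and the 7.68TB comparison drive are likewise hypothetical values chosen for illustration.

```python
import math

LANES_PER_SSD = 4  # assumption: each NVMe SSD uses a PCIe x4 link


def drives_needed(target_tb: float, drive_tb: float) -> int:
    """Whole drives required to reach target_tb of raw capacity."""
    return math.ceil(target_tb / drive_tb)


def lanes_needed(target_tb: float, drive_tb: float,
                 lanes_per_ssd: int = LANES_PER_SSD) -> int:
    """PCIe lanes consumed by the drives needed for target_tb."""
    return drives_needed(target_tb, drive_tb) * lanes_per_ssd


TARGET_TB = 100  # hypothetical per-node KV cache capacity target
for drive_tb in (7.68, 30.72):
    n = drives_needed(TARGET_TB, drive_tb)
    lanes = lanes_needed(TARGET_TB, drive_tb)
    print(f"{drive_tb} TB drives: {n} drives, {lanes} PCIe lanes")
# 7.68 TB drives: 14 drives, 56 PCIe lanes
# 30.72 TB drives: 4 drives, 16 PCIe lanes
```

Under these assumptions, the larger drives free 40 lanes on the same node, enough for several additional x16 devices such as GPUs or high-speed NICs.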

From edge-side preprocessing to high-speed training and inference, the PBlaze7 7940 series delivers a rare combination of speed, capacity, and efficiency in a single TLC SSD. It extends TLC's relevance into roles once dominated by QLC—reshaping expectations across the AI storage stack.

As AI continues to scale, full-featured PCIe 5.0 SSDs like the PBlaze7 7940 are proving essential for building faster, denser, and more efficient infrastructure—pushing TLC SSDs beyond their traditional boundaries.